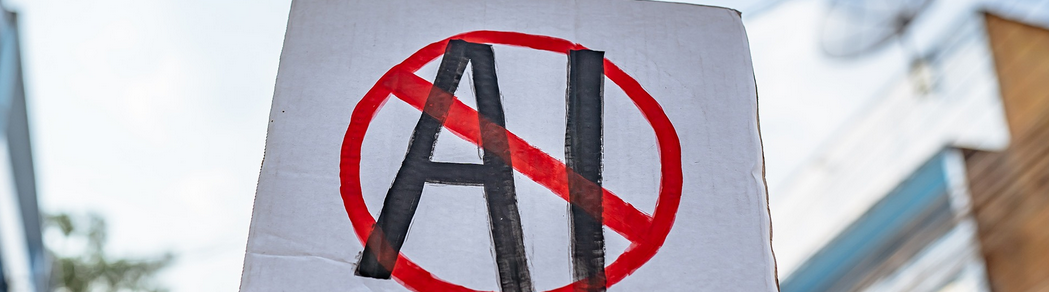

Why I Hate the AI Trend

I’ve always been passionate about computers. At first it was just tinkering with game consoles.

You know how it goes: in 2007 you discover YouTube and the internet, and suddenly you have access to a ton of information.

It’s crazy; you really feel like everyone is right there beside you, for free.

That’s when I stumbled onto hacking the first Xbox and the Wii, using DOSBox and all the possibilities Xavbox offered (for those who remember).

The whole hardware scene between 2005 and 2015 was insane: mind‑blowing innovations and Moore’s Law visibly in action, with ever more possibilities.

Around 2017 I switched from humanities studies to a technician diploma in IT.

What struck me was the sheer amount of stuff you have to learn and, above all, the level of the teachers and mentors.

The first was a Linux fan (Debian, to be precise), and he knew every tiny step of the system, every component and why it existed.

In telecommunications, my network professor was just as dedicated, with a mountain of resources and an uncanny ability to transmit knowledge and troubleshoot.

Then I spent three or four years in the working world. Honestly, ten years ago, writing a simple Bash or PowerShell script that actually worked took ages.

Everything was home‑grown, or bits of code glued together. Still, we learned a lot and helped each other to get things into production efficiently.

And none of that is ancient history; I’m far from being a veteran. After a second stint studying cybersecurity, I got a new perspective on education.

That was during COVID: everyone remote, phishing everywhere, attacks on infrastructure, fiber connections that kept dropping. All of that spiced up with Netflix and streaming that could choke a whole country’s bandwidth.

In that cyber school I already saw cheating. People weren’t very good, maybe even worse than the old‑school technicians. The fundamentals of infrastructure and IT simply weren’t mastered.

I don’t claim to be an expert, but we’re talking about basics without which you can’t even administer a system, let alone defend it. The level had dropped. At first I thought it was my own bias; it’s easy to tell yourself “maybe I just got unlucky.” But the workplace proved me wrong again.

After a year in a pretty nice NOC doing BGP configs, I was hired into a SOC.

That’s where things flip (yes, we’re getting to the point): I discovered LLMs.

I’m not a tech enthusiast per se. Sure, I like tech, but not the trends and hype of the moment.

To me, proven things that work well should be kept as long as they don’t pose a risk.

I think a lot of the supposed added value of certain technologies is just capitalist bullshit.

So let’s talk LLMs: how did we get here? How did we go from genuine mutual aid to answers like “I don’t know, ask Copilot”?

That shift is questionable, which is why it’s worth unpacking.

Cognitive degradation

Using an LLM can save time, debug code, push tasks forward, translate text.

On paper the technology looks amazing, and many think web developers will be replaced or that “low‑level” jobs will disappear soon. What’s striking is how fast the hype was adopted by the market and how readily people accepted it.

Of course, companies want money, not quality; they only care about revenue growth.

People use it, think they’re saving time, and try to become “pro prompt engineers,” believing it’s the career of the future.

In IT you now see requests like “generate me a Python script for this API” or “analyze and answer this task,” when you could have said “okay, look at the code, tell me where it’s stuck, explain the trade‑offs, give me optimization ideas.”

You end up with crappy code. The worst part is that I’m not even a developer, and I can still see it’s garbage.

Applications become unmanageable: a flood of junk to fix, absurd errors, all because the brain is lazy. We’re human, so this approach can’t work.

If tomorrow I told you that you could stop working because ChatGPT could do it for you, who would say no? As a result, junior devs can’t find work because AI‑driven workflows cost companies a fortune in tokens, and self‑proclaimed professional prompt engineers take credit for content built on stolen work. Seniors end up fixing the mess and holding off the apocalypse that’s unfolding. Some seniors may even change careers because of this disaster (or at least take a break) and disappear, leaving no room for juniors to get their hands dirty on tech.

The internet and computers were supposed to preserve know‑how, yet we’re losing it.

Cooperation failure and lack of overall vision

Cooperation is what’s kept humanity afloat.

The group is crucial. AIs block cooperation because an overloaded colleague would rather tell you to “ask Grok” than actually train you.

Since that colleague isn’t a trainer and isn’t paid extra to teach, you end up taking lessons from an LLM. That same steamroller teaches you half‑truths or outright falsehoods.

Cognitive biases stick with you for a long time, and still everyone opts for the easy route. Oh, and the crazy part: you’re no longer allowed to say that something takes time.

Deadlines shrink. Yeah, the boss thinks AI will boost productivity by 200%, so you’ll do the work of three people. No more slacking; you have to grind, otherwise you end up at the bottom of the pile and can’t afford food.

What about human contact? Society becomes even more individualistic and less productive (real productivity that serves people, not wind‑chasing profit).

It’s a pure capitalist product. I hate LLMs; they’re the worst invention ever.

Internet pollution

This is the part that disgusts me most.

The internet, that beautiful invention that connects us, has turned into a battlefield.

Bots have hogged traffic for years; now we have to wade through AI‑generated articles.

Doing a search has become a curse, with every article sponsored and churned out by text models.

Bad information feeds those same AIs, which ingest the poison and spit out false content that gets re‑ingested and poisons them again.

It’s hilarious: a huge, senseless circus. And most people just keep using these services.

Artists lose their work; you can argue about ownership, but not when content is literally stolen, fed to megacorporations that make money off the backs of the little guy.

What’s this nonsense? Add to that deepfakes that take under ten seconds to generate, massive disinformation, fake videos, fake music…

Conclusion: read books! Oh wait, those will soon be generated too. Too sad...

Seriously, go decentralized, boycott anything with an LLM baked in.

Personal data leaks

Yeah, it’s funny, but the companies that eat your data and sell it are the same ones that can’t protect it.

Not a day goes by without a data leak making front‑page news on a bot‑generated outlet.

Governments want to do the same, which is funny when we can’t even encrypt user data properly.

When some noob scrapes data, it’s ridiculous: “It was elite Russian hackers working with Kim Jong‑un,” they say.

We have to downplay it or we look like fools, and we have to sell cyber services anyway.

With endless scraping and leaking, everything gets ingested, so even with the best OPSEC you’re already easy to track.

It’s tragic, but it’s the beginning of the end for fundamental rights and free expression. Controlling your personal data is the only weapon against oppressive regimes.

Let that be a lesson; I hope we’ll manage to fight back.

All countries are radicalizing, so at worst we’ll break it all sooner.

Brain impact

Final act of the show: it wrecks learning capacity.

Paradoxically, students have all the resources and almost a tutor at home, yet ChatGPT is designed to keep things easy.

It’ll say “sure, you’re right” or mildly contradict you just enough to make you think you’re not that bad. Even if you’re terrible at the subject and need to hit a wall to learn, the AI feeds your ego and positively reinforces prompting. Learners will keep having the LLM write their assignments; opinions will be increasingly steered by the companies owning the models (already happened with Google, but now it’s worse).

They’ll have a single, centralized access point to information. Does that ring a bell?

It becomes too easy to rewrite history, sway elections, manipulate groups, push violence. Psychology studies on these cognitive impacts are starting to surface, and they don’t look good. I pray we’ll be careful; these effects are dangerous.

I hope we don’t ruin a generation and that these tools get regulated because they’re too powerful.

At work I also see people unable to search on their own, even though LLMs could help them find sources and cross‑reference info. That could be ultra‑interesting, but we default to the simplest route instead of digging deeper.

Remember: "quality beats quantity"

It’s not just a stupid phrase; it finally makes sense, and it’s never been more true.